How To Eliminate The Hidden Project Plan Via Agile Adoption

You would think that within that behemoth of a project plan required by waterfall processes there would be a page or two outlining fixes and time needed to tidy up. But for the most part…there isn’t. Bugs, misses, and lost features are part of what I like to call the “hidden project plan,” time spent after […]

5 Factors To Help Prioritize Your Scrum Product Backlog

It’s been said that your product backlog is the ultimate to-do list. However, have you ever tried to complete a to-do list without prioritizing each task first? Or going to the grocery store without grouping items? You couldn’t pay me to go to a grocery store with a chaotic list…especially on a Sunday. Madhouse. This […]

Blog Series: 10 Agile Transformation Pitfalls and How to Address Them

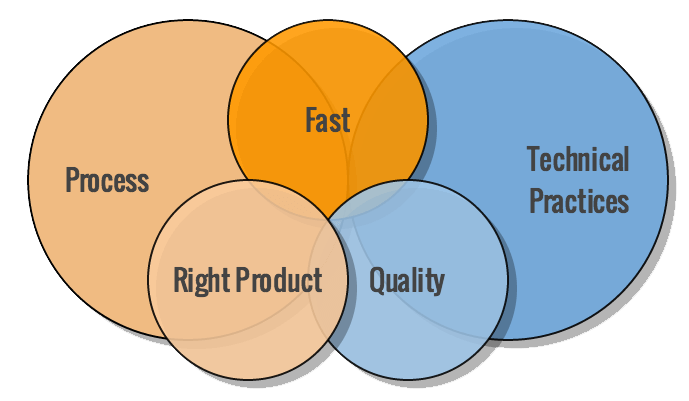

The trend of IT and software organizations transitioning to Agile continues to grow. While Agile is a better way to get quality work done faster, the transition to Agile can be a weary road to travel. In this series, we’ll explore 10 of the most common pitfalls we see in Agile transformations and tips on […]

How to Fight the Chaos Phase of Agile Adoption and Win with an Agile Coach

Why do you need an Agile Coach? Developed by Virginia Satir, the Satir Change Model illustrates the impact a well-assimilated change has on group performance over time. With its curved check trend, the Satir Change Model shows that the adoption of new concepts is not always smooth. Performance typically hits a point much lower than […]

Agile Velocity Registers 52% Growth in Coaching & Training Revenue in First Half of 2015

Escalating Demand Creates Need to Expand Workforce by Adding New Key Hire

AUSTIN, TEXAS – August 3, 2015 – Agile Velocity, a Texas-based provider of Agile services, including Agile transformations, assessments, training, coaching and recruiting, announced today that it has been experiencing ongoing company growth, evidenced by rising revenue. To support the increasing demand for […]

User Stories that Product Owners Often Overlook

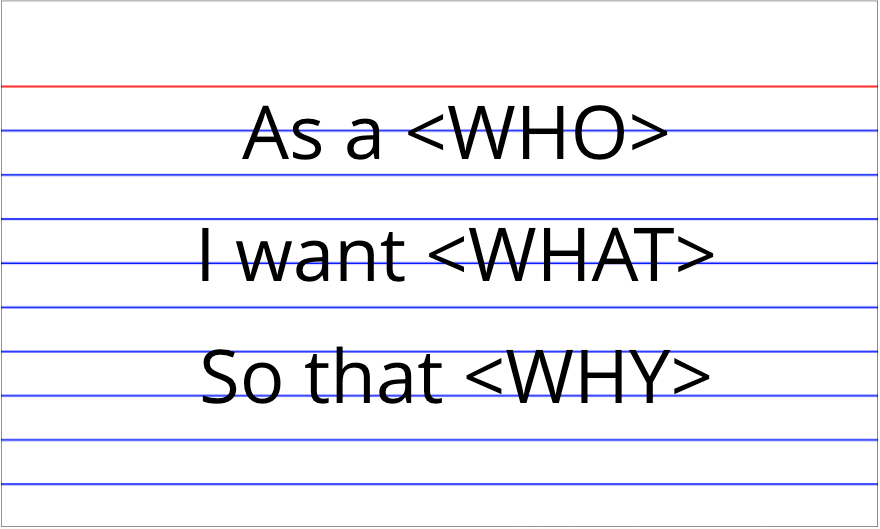

Product Owners typically express application requirements from a user-centric perspective. This usually means that non-functional requirements are not a primary focus. However, Product Owners often forget that they and the development team are also potentially a class of “user” of features in the application being developed. The PO and team might want features to help with […]

Practical Agile Tips

Amazon lists over 3,000 books related to Agile Software Development, and there is no shortage of information on the internet about how best to implement Agile, but there are still small improvements and useful tips that I don’t often see mentioned. Practical Agile Tips: Biggest impact on success of a team is […]

Why Invest in a Dedicated Product Owner?

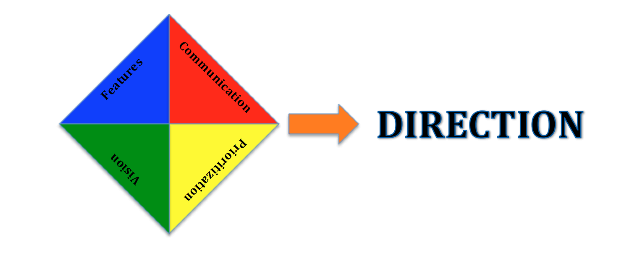

In Agile methodology, the role of the Product Owner is critical to the overall effectiveness and success of the development team. When a company is looking to reduce time to market and increase productivity, a dedicated Product Owner is one solution. Using the analogy of a sailing ship, the Product Owner is the Navigator – […]

Role of a Coach starts with Building Team Trust and Alignment

David Hawks, CST, CSC, presented the Coach Role starts with Building Trust and Alignment at the Scrum Alliance’s Scrum Gathering. The Coach Role presentation includes: Understand the role of coaching; Learn five dysfunctions of a team; Realize 3 tips to improve trust with a team; Understand team alignment; How to establish team values; Learn a […]

Tech Debt Game

David Croley, Agile Player-Coach, presented Tech Debt – Understanding its Sources and Impacts Through a Game at the Keep Austin Agile conference. Definition of Technical Debt: A useful metaphor similar to financial debt; “Interest” is incurred in the form of costlier development effort; Can be paid down through refactoring the implementation; Unlike monetary debt, it is difficult […]